Description

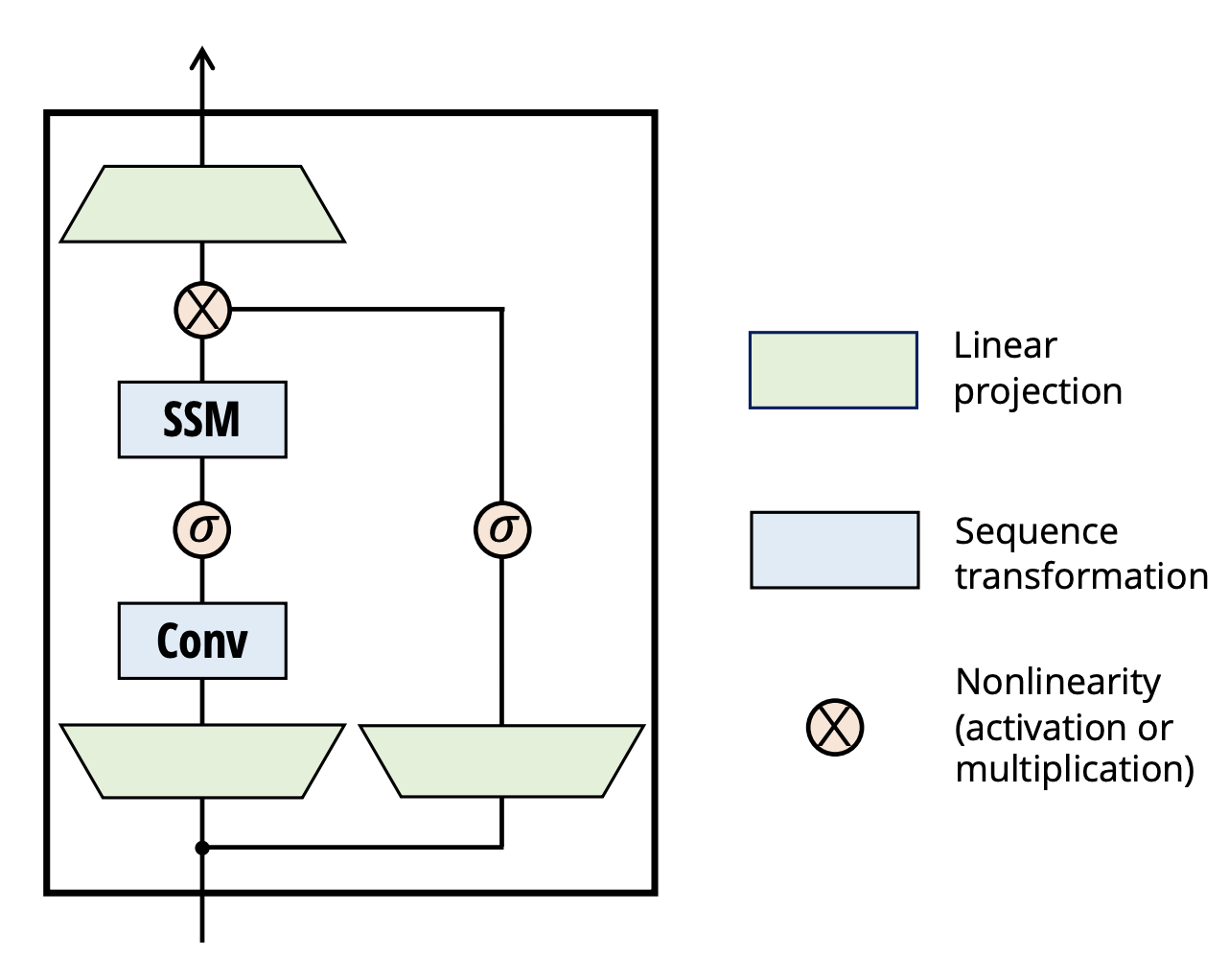

The Hateful Memes Challenge is a multimodal classification task posed by Facebook AI Research (FAIR) that requires models to detect hate speech by understanding subtle relationships between the captions and images of memes. Mamba is a state-space model (SSM) that processes sequences with a selective scan mechanism and is claimed to be more efficient than transformers. In this paper, we implement several variations of Mamba-based architectures and apply them to the Hateful Memes dataset. Our experiments show that Mamba generally performs worse than transformer-based architectures on this task, consistent with later work reporting that transformers outperform state-space models. This work was completed as the final project for Georgia Tech’s CS 7643: Deep Learning course.
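To make the "selective scan" idea concrete, the following is a minimal single-channel sketch in plain Python. It is not the implementation used in this project. The function name `selective_scan` and the parameters `a`, `w_delta`, `w_b`, and `w_c` are hypothetical, and the scalar weights stand in for the learned linear projections a real Mamba layer would use. The sketch only illustrates the core recurrence: the step size and the input and output maps depend on the current token, and the hidden state is updated once per token.

```python
import math


def softplus(z):
    # Numerically stable log(1 + exp(z)); keeps the step size positive.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs, a, w_delta, w_b, w_c):
    """Run a simplified selective SSM over a scalar sequence `xs`.

    a        -- list of N negative decay rates (a diagonal state matrix)
    w_delta  -- scalar weight mapping the input to a step size (assumed)
    w_b, w_c -- lists of N weights giving input-dependent B_t and C_t (assumed)
    """
    n = len(a)
    h = [0.0] * n  # hidden state, carried across time steps
    ys = []
    for x in xs:
        delta = softplus(w_delta * x)   # input-dependent step size
        b = [w * x for w in w_b]        # input-dependent input map B_t
        c = [w * x for w in w_c]        # input-dependent output map C_t
        for i in range(n):
            a_bar = math.exp(delta * a[i])  # discretized state transition
            b_bar = delta * b[i]            # simplified discretized input map
            h[i] = a_bar * h[i] + b_bar * x
        ys.append(sum(c[i] * h[i] for i in range(n)))
    return ys


outputs = selective_scan([1.0, 0.0, 2.0], [-1.0, -0.5], 0.5, [1.0, 0.5], [1.0, -1.0])
```

The loop does a constant amount of work per token, so the cost grows linearly with sequence length, whereas self-attention compares every pair of tokens. Practical implementations replace this sequential loop with a hardware-aware parallel scan over many channels.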

Aryan Mittal
